Photo by UX Indonesia on Unsplash

A guide to Usability Testing

A step closer to building designs with enhanced user experience

What is Usability testing?

Usability testing is an evaluative user research technique that helps key stakeholders and the project team better understand how people interact with a product. It can be done during design, during development, or after the product has been released. During a usability test, participants are asked to perform specific tasks with the product and provide feedback.

Usability testing research methods can be conducted remotely or in person. The types are as follows:

User interviews

Unmoderated usability testing

User interviews

It is critical to ask the right questions during a User Interview. You get what you ask for, as the saying goes! Because the researcher's time during an interview is limited and there is usually so much to cover, asking all possible questions is not an option. So, knowing which questions to ask and which to avoid is just as important as knowing how to ask them.

Prior to the User Interview

This is the stage when all of the research preparations are made. The following deliverables are produced as part of this phase:

Discussion Guide - research questions are prepared here, though what you write may not always be what you ask during interviews.

Interview Schedule

Roles and responsibilities, for example, notetakers, moderators, translators, and so on.

Scenarios List

A list of any required test materials, such as design prototypes, competitor websites, and so on.

Dependencies on stakeholders

Consent and non-disclosure forms, etc.

Exit survey, which is mostly closed-ended questions

During the User Interview cycle

Whether the interviews are for exploratory or evaluative research, the ability to ask the right questions is crucial here. Let's explore some examples of how to run an effective interview.

Different types of questions

i) Warm-up question: it is important to get the participant talking and comfortable. For example, you can start by asking, "Do you prefer coffee or tea?"

ii) Begin the investigation with open questions.

Explain your experience

How many/how much...

What is the cause of...

When was the last time you...

How do you...

iii) Task-based questions

Show us how you do it...

What motivates you to do that...

What preparations do you need to make...

iv) Recall a previous experience

Could you please share your most recent experience with...

Tell me everything you remember about the last time you used...

What was the most noteworthy aspect of...

Consider a real-life situation in which this could have been useful...

v) Beliefs and attitudes

What are your thoughts on...

What do you enjoy the most about...

What do you dislike the most about...

In what way could this be useful...

If you were to consider the advantages of... what would they be...

vi) Probing questions

Please tell me more about that.

Could you please elaborate?

Why did you go about it that way...

Why did you think that...

Let me repeat what you said. Is this what you meant?

What makes you think that...

If you think about it again...

vii) Closing remarks

Do you have any questions you'd like to ask...

Is there anything else you'd like to say?

![Best interview questions to ask candidates [and great interview tips]](https://resources.workable.com/wp-content/uploads/2022/08/110interview-questions-and-asnwers.png)

After the User Interview

After you conduct user interviews, it is time to review the data you collected so that you can use it in your design. Debriefing and preparing a topline summary are the key activities in this step.

Debriefing

Debriefing helps the team determine what went well during the session, what can be improved, what should be prioritized, and so on.

Preparation and distribution of topline summary

This keeps stakeholders up to date on current findings and aids in making initial project and product decisions.

Updating the Research Schedule

This step deals with dropouts, changes in participant availability, and so on.

Stakeholders will often have new questions triggered during the interviews. A researcher acting as moderator should watch for those questions and try to incorporate them into the interview if time allows. Keep open questions as broad as possible, and remember that there is always more to explore than a single session can cover.

Unmoderated Usability Testing Types

In usability testing, the term "unmoderated" means that research participants use the product while no one is watching or interacting with them. Unmoderated usability testing can be done in two ways:

Unmoderated in-person usability testing

Participants use the product at a predetermined physical location, and no one watches them while they do so. The session is recorded, and once it is finished the researcher reviews the interaction and any comments made while using the product. This method is less popular than remote unmoderated usability testing because it requires researchers and participants to be present at a fixed time and location.

Unmoderated remote usability testing

This method uses an internet-based user research platform. The participants and the researcher do not need to be in the same place at the same time; they can be anywhere in the world with an internet connection. The participants complete the tasks, and their interactions with the product are recorded and uploaded to a server. The researchers later review the screen recordings in their own time and interpret the participants' feedback. This feedback may include face recordings as well as verbal think-aloud comments.

How to Conduct an Unmoderated Usability Test

To successfully conduct unmoderated remote usability testing, the following preparation is required:

1. Define the usability testing objectives.

What do stakeholders want to learn?

How would the findings benefit the company?

Is the research method appropriate for all the objectives?

Are all stakeholders in agreement regarding the goal statements?

Which of the objectives can be included in future rounds of usability testing?

2. Determine the participant profile

Number of targeted profiles and personas

Age group, gender, nationality, and income of the target audience

Relevant behavioral attributes, such as what they do, like, and dislike

Sample size: the number of participants in each profile

3. Screen and shortlist candidates

Prepare a list of screener questions to help you select your candidates.

Determine which answers should qualify or disqualify candidates for participation.

Assess each candidate's level of comfort in thinking aloud.

Include a question to assess feedback articulation ability.

Collect contact information to distribute the usability test and reach out if necessary.

Request that participants consent to participate and agree to be recorded.

Request consent from participants to store their personal information, if any.

Include the type of incentive, the amount, and the payment method.
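The qualify/disqualify rules from this step can be written down explicitly before the screener goes out. The sketch below is only an illustration: the `ScreenerResponse` fields, the sample candidates, and the specific rules (weekly product use qualifies, working in UX research disqualifies) are hypothetical and should be replaced with your own screener questions.

```python
from dataclasses import dataclass


@dataclass
class ScreenerResponse:
    # All fields are hypothetical examples of screener answers.
    name: str
    uses_product_weekly: bool         # assumed qualifying answer
    works_in_ux_research: bool        # assumed disqualifying answer
    comfortable_thinking_aloud: bool
    consents_to_recording: bool


def qualifies(response: ScreenerResponse) -> bool:
    """Apply the qualify/disqualify rules to one screener response."""
    if response.works_in_ux_research:
        return False  # disqualifier: too familiar with research methods
    return (
        response.uses_product_weekly
        and response.comfortable_thinking_aloud
        and response.consents_to_recording
    )


responses = [
    ScreenerResponse("Asha", True, False, True, True),
    ScreenerResponse("Ben", True, True, True, True),     # disqualified
    ScreenerResponse("Chen", False, False, True, True),  # does not qualify
    ScreenerResponse("Dewi", True, False, True, False),  # no recording consent
]

shortlist = [r.name for r in responses if qualifies(r)]
print(shortlist)
```

Writing the rules as a single function makes it easy for stakeholders to review who gets in and why before recruitment starts.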

4. Choose a platform for user research

Choose a remote usability testing platform based on the information you want to collect and the products that participants will be testing.

Is the platform compatible with the research method you will conduct?

Can the platform recruit participants?

Can the platform host the test material you want to test?

Does the platform support the devices that the test must be run on, such as a computer or a mobile device?

Does the platform record video of the sessions?

5. Gather test materials

Decide what you want the participants to test. Options include:

Wireframes

Design prototypes

If necessary, the participants may need to sign a non-disclosure agreement.

The availability of test materials as a dependency must be included in the usability testing plan.

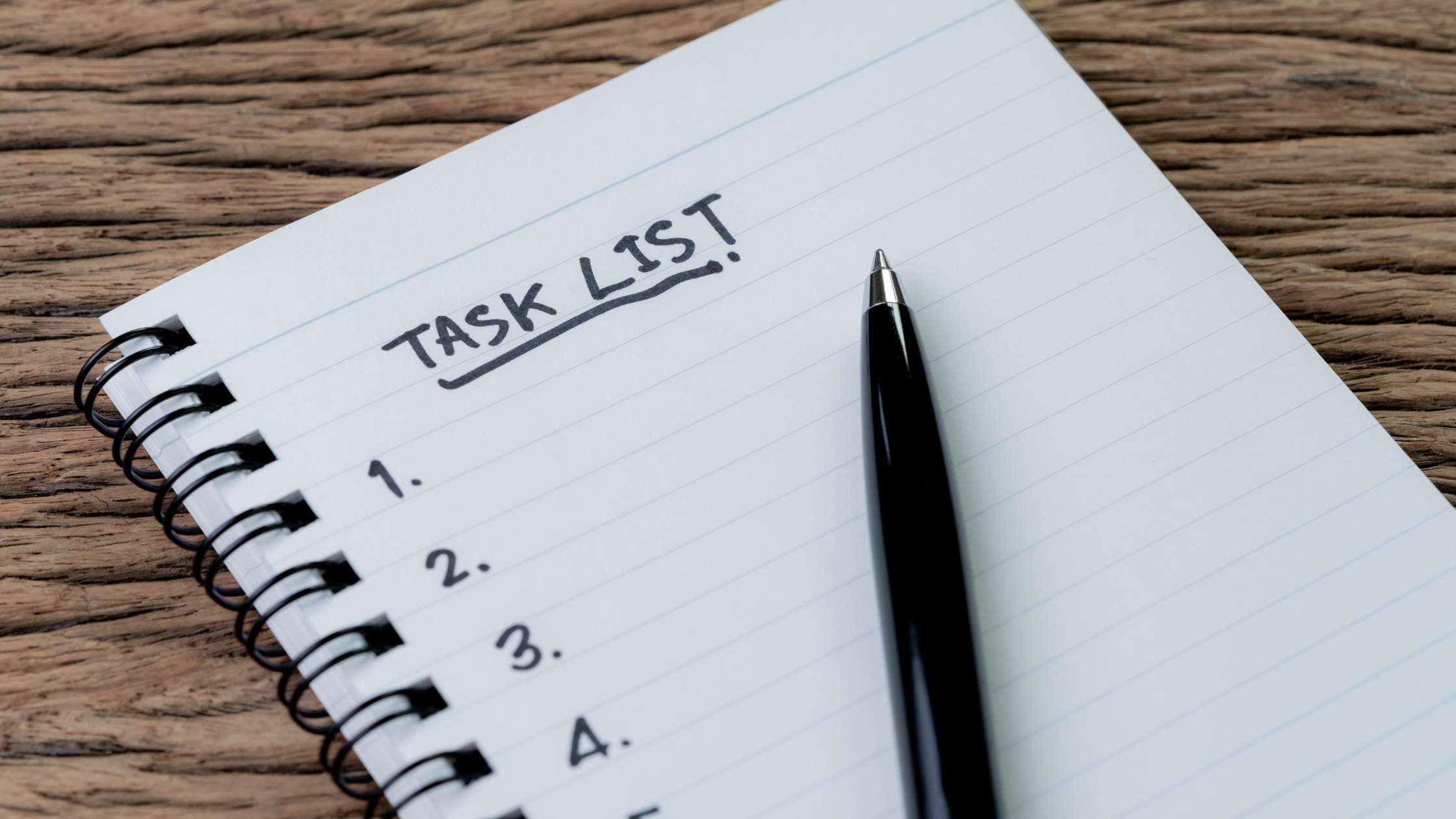

6. Create a list of tasks to be completed as well as a list of usability metrics.

Make a list of the tasks that the participants would be responsible for. Create the tasks based on what users would accomplish with the product.

Limit yourself to between 5 and 7 tasks at a time.

Each task must correspond to the study's objectives.

Tasks must be clearly worded and unambiguous.

Each task must have clearly defined success criteria.

Each task must specify an end state so that participants know the task has been completed.

Decide on the task flow, that is, the sequence in which tasks are presented.
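The rules above can be checked mechanically before a test plan is finalized. This is a minimal sketch, not a prescribed format: the `Task` fields, the `validate_plan` helper, and the sample tasks and objectives are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Task:
    # Hypothetical structure for one usability task.
    prompt: str            # what the participant is asked to do
    objective: str         # study objective the task maps to
    success_criteria: str  # how success is judged
    end_state: str         # what the participant sees when done


def validate_plan(tasks, objectives, max_tasks=7):
    """Return a list of human-readable problems found in the task list."""
    problems = []
    if len(tasks) > max_tasks:
        problems.append(f"Too many tasks: {len(tasks)} (limit {max_tasks})")
    for number, task in enumerate(tasks, start=1):
        if task.objective not in objectives:
            problems.append(f"Task {number} does not map to a study objective")
        if not task.success_criteria.strip():
            problems.append(f"Task {number} has no success criteria")
        if not task.end_state.strip():
            problems.append(f"Task {number} has no end state")
    return problems


objectives = {"checkout completion", "search findability"}
tasks = [  # list order doubles as the task flow
    Task("Find a pair of running shoes.", "search findability",
         "Opens a running shoe product page", "Product page is visible"),
    Task("Buy the shoes in your cart.", "checkout completion",
         "Order is placed", ""),  # missing end state on purpose
]

problems = validate_plan(tasks, objectives)
print(problems)
```

Keeping the tasks in an ordered list means the list order itself documents the task flow.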

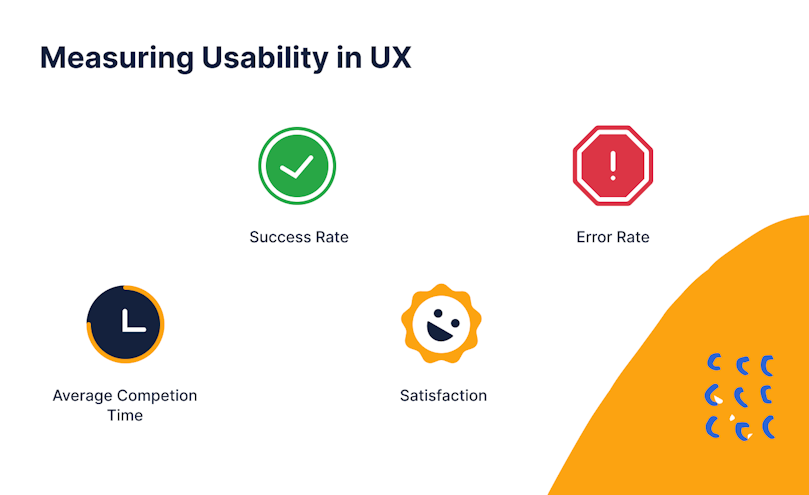

7. Determine usability metrics for each task.

Failure or success

Time to complete the task

Time to first click or tap

Count of clicks and taps

Count of swipes

Paths of navigation, such as the number of pages or screens

A task's number of retries
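Once sessions are recorded, several of these metrics can be summarized per task with a few lines of code. The sketch below assumes each session has been exported as a dictionary with `success`, `seconds`, `clicks`, and `retries` fields; those field names and the sample numbers are made up for illustration, and real platforms will export data in their own formats.

```python
from statistics import mean, median

# Hypothetical session records for a single task.
sessions = [
    {"participant": "P1", "task": "checkout", "success": True,
     "seconds": 42.0, "clicks": 9, "retries": 0},
    {"participant": "P2", "task": "checkout", "success": False,
     "seconds": 95.0, "clicks": 21, "retries": 2},
    {"participant": "P3", "task": "checkout", "success": True,
     "seconds": 58.0, "clicks": 12, "retries": 1},
    {"participant": "P4", "task": "checkout", "success": True,
     "seconds": 50.0, "clicks": 10, "retries": 0},
]


def summarize(records):
    """Summarize success rate, time on task, clicks, and retries."""
    successes = [r for r in records if r["success"]]
    return {
        # Share of attempts that ended in success.
        "success_rate": len(successes) / len(records),
        # Time on task is only meaningful for successful attempts.
        "median_seconds_on_success": (
            median(r["seconds"] for r in successes) if successes else None
        ),
        "mean_clicks": mean(r["clicks"] for r in records),
        "mean_retries": mean(r["retries"] for r in records),
    }


summary = summarize(sessions)
print(summary)
```

The median is used for time on task because a single very slow participant can distort a mean when the sample is small.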

8. Plan questions to be asked after the tasks are finished

Survey questions can be asked immediately after an individual task or once all tasks have been completed. Survey questions can be of various types:

Open-ended questions

Single or multiple choice

5-point or 7-point Likert scale

Dropdown

Matrix or ranking

Inquire about recall, task difficulty, and any additional information relevant to providing background information about participants, such as other similar experiences they have had.
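Likert-scale answers, such as a post-task difficulty rating, are usually summarized as a distribution rather than a single average. The following sketch assumes a 5-point scale where a higher score means the task felt easier; the `summarize_likert` helper and the sample ratings are hypothetical.

```python
from collections import Counter
from statistics import median


def summarize_likert(ratings, points=5):
    """Summarize ratings given on a 1..points Likert scale."""
    if any(r < 1 or r > points for r in ratings):
        raise ValueError(f"ratings must be between 1 and {points}")
    counts = Counter(ratings)
    return {
        # Number of answers for every point on the scale, including zeros.
        "distribution": {s: counts.get(s, 0) for s in range(1, points + 1)},
        "median": median(ratings),
        # Share of answers in the two highest categories.
        "top_two_share": sum(1 for r in ratings if r >= points - 1) / len(ratings),
    }


# Hypothetical answers to "How easy was this task?" (1 = very hard, 5 = very easy)
ratings = [4, 5, 3, 4, 2, 5, 4]
likert_summary = summarize_likert(ratings)
print(likert_summary)
```

For a 7-point scale, pass `points=7`; the top-two share then counts ratings of 6 and 7.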

9. Perform a test run prior to the test launch.

Experimenting with the test yourself, also known as a dry run, helps to refine it.

Dry run with internal and external participants

Determine whether or not there is a fatigue factor in completing the test.

Check to see if everything is working as it should. For example, prototypes are loading correctly and are the correct ones.

Fine-tune questions and include information that may have been overlooked.

If everything is in order, start the test.

Final thoughts

I hope you now have a clearer picture of usability testing and the steps involved in the two approaches covered here: user interviews and unmoderated usability testing. Hopefully, this information will help you improve the user experience of your application and build effective designs.